Looking for the best AI tools to sync audio with video seamlessly? Here’s a quick guide to the top options in 2025.

These tools save time and improve video quality by aligning speech with visuals. Whether you’re dubbing movies, creating multilingual content, or producing marketing videos, these AI tools can handle it all.

Top 5 AI Lip Sync Tools:

- VisualDub by NeuralGarage: Supports 35+ languages and delivers studio-quality output that preserves original facial features and speaking style.

- Syncmonster.ai: Offers voice cloning, supports multiple languages, and excels at syncing multiple speakers in one video.

- Sync.so: API-driven tool for developers, with fast lip sync in multiple languages.

- HeyGen: Multilingual support with customizable AI avatars and real-time lip-syncing.

- Hyra: Focused on fast, real-time synchronization for streamlined workflows.

Quick Comparison Table

| Feature | VisualDub | Syncmonster.ai | Sync.so | HeyGen | Hyra |
|---|---|---|---|---|---|
| Language Support | 35+ | Major langs | Major langs | Major langs | Limited |
| Real-Time Processing | No | No | Yes | No | No |
| Multi-Speaker Sync | Yes | Yes | No | No | No |
| Emotion Preservation | Yes | No | No | Yes | No |
| Best For | Film/Ads | Digital advertisers/Creators | Developers | Creators | Quick Edits |

These tools are reshaping video production, cutting costs by up to 40% and saving time by 50%. Choose based on your needs – whether it’s multilingual dubbing, real-time processing, or emotion preservation.
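One way to apply this advice is to encode the comparison as data and filter it by the features a project needs. The following is a minimal Python sketch: the feature values are copied from the Quick Comparison Table, while the `Tool` class and the `shortlist` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    languages: str
    real_time: bool
    multi_speaker: bool
    emotion_preservation: bool
    best_for: str


# Values copied from the Quick Comparison Table in this article.
TOOLS = [
    Tool("VisualDub", "35+", False, True, True, "Film/Ads"),
    Tool("Syncmonster.ai", "Major langs", False, True, False,
         "Digital advertisers/Creators"),
    Tool("Sync.so", "Major langs", True, False, False, "Developers"),
    Tool("HeyGen", "Major langs", False, False, True, "Creators"),
    Tool("Hyra", "Limited", False, False, False, "Quick Edits"),
]


def shortlist(real_time=False, multi_speaker=False, emotion_preservation=False):
    """Return the names of tools that offer every feature requested as True."""
    wanted = {
        "real_time": real_time,
        "multi_speaker": multi_speaker,
        "emotion_preservation": emotion_preservation,
    }
    required = [feature for feature, needed in wanted.items() if needed]
    return [t.name for t in TOOLS if all(getattr(t, f) for f in required)]


if __name__ == "__main__":
    print(shortlist(multi_speaker=True, emotion_preservation=True))
```

With the table's values, asking for both multi-speaker sync and emotion preservation leaves only VisualDub, while asking for real-time processing alone leaves only Sync.so.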


1. VisualDub by NeuralGarage

VisualDub, created by NeuralGarage, uses generative AI to deliver studio-quality lip sync that aligns seamlessly with audio input. Developed entirely in-house, the platform has already processed over 1.5 million seconds of video and has been trained on more than 1 billion data points.

The tool specializes in matching existing video with new audio, creating a natural, polished dubbed effect. Big names such as Coca-Cola, Amazon, L’Oréal, Nestlé, and HP have already started using VisualDub to make their creatives look seamless across languages.

What makes VisualDub stand out is its ability to replace dialogue without requiring expensive reshoots. It also scales personalized video messaging while maintaining high visual quality and storytelling integrity. These core features set the stage for its impressive multi-lingual capabilities.

Language Support and Multi-lingual Capabilities

VisualDub supports over 35 languages, including German, Spanish, and Mandarin, and works without being tied to specific faces or languages. Its real strength lies in phoneme-level adaptation – analyzing the smallest sound units to ensure lip and facial movements align perfectly for each language.
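To make the idea of phoneme-level adaptation more concrete, here is a simplified Python sketch of an approach used in lip-sync systems generally: mapping phonemes (sound units) to visemes (mouth shapes) and building a timeline of mouth shapes from timed phonemes. This is not VisualDub's actual pipeline, which the source does not describe. The mapping table, the symbol names, and the `viseme_timeline` function are all illustrative assumptions, and the table is deliberately tiny.

```python
# Hypothetical, heavily simplified phoneme-to-viseme table (assumption).
# Several phonemes share one mouth shape, e.g. P, B and M all close the lips.
PHONEME_TO_VISEME = {
    "P": "lips_closed", "B": "lips_closed", "M": "lips_closed",
    "F": "lip_to_teeth", "V": "lip_to_teeth",
    "AA": "open_wide", "AE": "open_wide",
    "UW": "rounded", "OW": "rounded",
    "IY": "spread", "EH": "spread",
}


def viseme_timeline(phonemes):
    """Convert timed phonemes into a timeline of mouth shapes.

    phonemes: list of (symbol, start_seconds, end_seconds) tuples in time order.
    Returns a list of (viseme, start_seconds, end_seconds) tuples, merging
    back-to-back phonemes that map to the same mouth shape.
    """
    timeline = []
    for symbol, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(symbol, "neutral")
        previous = timeline[-1] if timeline else None
        if previous and previous[0] == viseme and previous[2] == start:
            # Same mouth shape continues without a gap: extend the last entry.
            timeline[-1] = (viseme, previous[1], end)
        else:
            timeline.append((viseme, start, end))
    return timeline


if __name__ == "__main__":
    timed = [("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("M", 0.3, 0.4), ("B", 0.4, 0.5)]
    for entry in viseme_timeline(timed):
        print(entry)
```

Because the sounds a language uses differ, the phoneme sequence (and therefore the mouth-shape timeline) changes when the audio is dubbed, which is why adaptation at this level matters for each target language.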

"VisualDub will allow you to shoot in one language and recreate it authentically in every language on earth without making it look like dubbed content."

– Mandar Natekar, Co-founder & CEO, NeuralGarage

This feature is a game-changer for brands expanding globally. NeuralGarage’s collaboration with Amazon India is a prime example of how VisualDub is helping companies bridge language barriers.

Facial Expression and Emotion Preservation

Using its VisualDub technology, NeuralGarage ensures that authentic facial expressions remain intact during the lip sync process. It carefully preserves details like teeth placement, facial hair, skin tone, and texture – even in challenging scenarios involving side profiles or multiple speakers.

"Our technology delivers precise lip sync with top-tier visual fidelity and timing."

– Subhabrata Debnath, Co-Founder and CTO, NeuralGarage

Guided by Subhabrata Debnath, an expert in computer vision and generative models, VisualDub is designed to handle diverse conditions, such as varied angles, lighting, and head movements, all while maintaining a visually accurate and lifelike appearance.

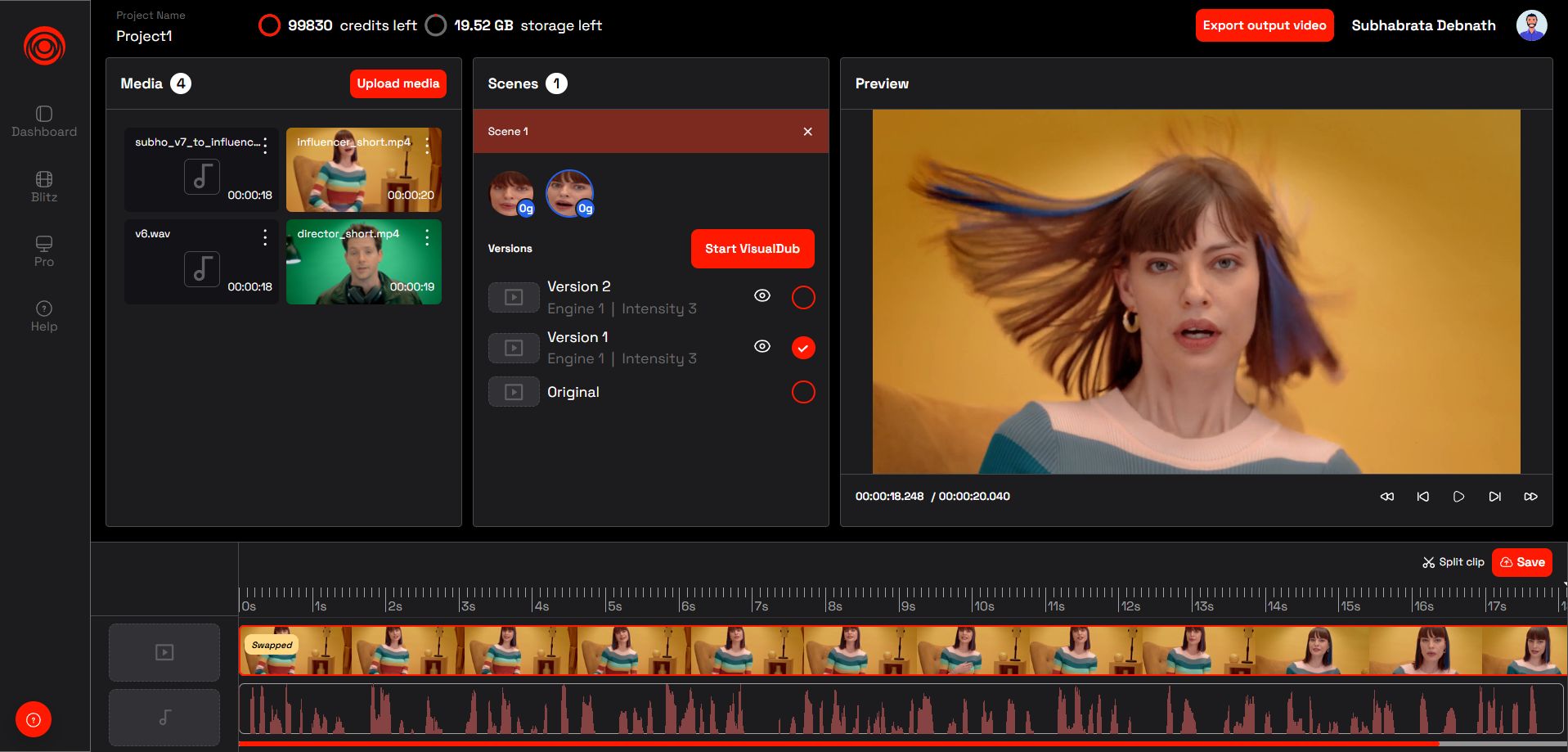

2. Syncmonster.ai

Recently launched, Syncmonster AI has quickly distinguished itself as a user-friendly platform with a range of controls for precise lip sync. By combining AI dubbing and voice cloning with lip-sync technology, it offers a strong solution for worldwide content localization.

Among Syncmonster AI’s most compelling innovations is SyncBoost, a feature that lets users fine-tune the amount of lip synchronization on a frame-by-frame basis. Unlike generic translation tools, SyncBoost generates dubbed videos in which mouth movements align closely with the replacement audio, matching each project’s demands. The platform also supports scenes containing multiple speakers and accommodates a wide range of languages and regional dialects.

"This is a revolution that literally transforms the Digital Advertising landscape." – Sukrit Garg, Category Head, Leo Burnett.

The platform integrates lip-sync capabilities directly into its Pro interface, allowing users to apply lip-syncing to specific sections of a video instead of the entire clip. This flexibility saves time and processing resources. Combined with its broad language compatibility, Syncmonster AI is a go-to solution for digital agencies, content creators, and businesses alike.
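To illustrate why processing only selected sections saves resources, the sketch below estimates what fraction of a clip would need lip-sync work given a set of chosen time ranges. It is a generic Python illustration, not Syncmonster's interface or API: the function names are hypothetical, and it assumes processing effort scales roughly with the duration processed.

```python
def merge_segments(segments):
    """Merge overlapping or touching (start, end) ranges, given in seconds."""
    merged = []
    for start, end in sorted(segments):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous range: extend it instead of adding a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def fraction_to_process(segments, clip_seconds):
    """Share of the clip covered by the selected ranges (0.0 to 1.0)."""
    covered = sum(end - start for start, end in merge_segments(segments))
    return covered / clip_seconds


if __name__ == "__main__":
    # Hypothetical selections on a 100-second clip.
    selected = [(10, 20), (15, 25), (40, 50)]
    print(merge_segments(selected))
    print(fraction_to_process(selected, 100))
```

In this hypothetical case, only a quarter of the clip is sent for lip-syncing, and the rest of the footage is left untouched.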

Language Support and Multi-lingual Capabilities

Syncmonster AI supports translation in over 30 languages. It handles everything from widely spoken languages like English, Spanish, French, and Mandarin to regional dialects, adapting to subtle variations in speech patterns and rhythms.

"This brought our campaigns closer to each audience with unmatched precision." – Kedar Ravangave, Head of Brand and Category marketing, Amazon IN

The versatility of Syncmonster also shines in music videos and rap content, where precise timing is crucial. It’s an invaluable tool for businesses aiming to break into international markets.

Multi-speaker Synchronization

Syncmonster AI also excels at managing videos featuring multiple speakers or faces. It can automatically sync individual voices to specific faces in group settings. The technology distinguishes between different speakers and applies accurate lip movements to each person. If needed, users can manually adjust face-to-voice matches for even greater accuracy in complex scenarios. Additionally, it preserves fine facial details, ensuring a polished final product.

Facial Expression and Emotion Preservation

To cater to varying needs, Syncmonster AI offers two processing modes. Blitz Mode delivers quick results, ideal for frontal videos with a single person, while Pro Mode focuses on highly realistic results for footage that needs a high degree of lip-sync control. Syncmonster handles challenging conditions like head movements, facial hair, and non-frontal angles, ensuring smooth and accurate lip-syncing. It also maintains natural facial expressions, making the final output look authentic rather than robotic.

This feature is particularly useful for product demonstrations and advertising campaigns. As Daniel Cherian, a Syncmonster user, explains, "The user interface is very friendly, it delivers impressive results with excellent video output."

3. Sync.so (Formerly Synclabs)

Sync.so is a tool tailored for developers, offering an API-driven solution for scalable lip-syncing. At the heart of its technology is the Lipsync-2 AI model, which performs fast lip sync for creator videos. As Sync.so explains:

"The lipsync model can do real time processing without needing to train on speakers."

That said, some users have noticed occasional visual artifacts in the videos, which might be a trade-off for the model’s distinctive capabilities.

Language Support and Multi-Lingual Features

Sync.so supports a variety of languages for dubbing, ensuring that the visual and audio elements stay in sync. This feature is particularly helpful for creators looking to connect with audiences worldwide while maintaining professional-quality content.

Real-Time Processing and Latency

Sync.so prioritizes precise lip synchronization, aligning audio and visuals seamlessly. While higher-quality outputs may require more processing time, its powerful API integrates smoothly with industry-standard editing tools. According to NVIDIA research, using GPU servers can cut rendering time by 40% compared to traditional CPU servers, making the process faster and more efficient.

These strategies also allow Sync.so to maintain natural facial expressions, adding to its appeal for creators aiming for authenticity.


4. HeyGen

HeyGen is an AI-powered video platform trusted by several users. Combining cutting-edge AI with an easy-to-use interface, HeyGen delivers professional-grade lip-sync results for avatars. As Shivali G., a satisfied user on Product Hunt, shared:

"HeyGen powers our human-like avatars with perfect lip-sync. It’s less complex and more intuitive than other AI tools we tried."

Language Support and Multi-Lingual Capabilities

HeyGen supports translations in multiple languages and dialects. It can automatically detect the language in your video or let you manually select it. From there, it translates both audio and on-screen text into your desired language. What’s more, users can choose regional accents – like Mexican or Argentinian Spanish – making translations feel authentic. Enterprise users can even upload custom voice files or select a brand-specific voice.

For best results, HeyGen recommends facing the camera directly and keeping background noise to a minimum. Videos can be uploaded directly or via links from platforms like YouTube and Google Drive.

Real-Time Processing and Latency

HeyGen simplifies the lip-sync process with its AI technology, which automatically generates precise lip movements. To achieve the best synchronization, it’s helpful to use clear audio and a quality microphone. The platform also allows users to fine-tune lip movements, giving creators control over synchronization accuracy to meet their specific needs.

Facial Expression and Emotion Preservation

One of HeyGen’s standout features is its ability to maintain the natural appearance of original videos during lip-syncing. Its AI avatars come with customizable facial expressions, offering a new level of personalization. Joseph S., a user on G2, praised this quality, saying:

"HeyGen’s avatars are incredibly lifelike, with natural movements and expressions. I was blown away by the seamless lip-sync. I can input my script and generate a polished video in no time."

This attention to detail extends to preserving emotional authenticity, which is especially useful in contexts like educational content. By maintaining visual continuity, HeyGen ensures viewers stay engaged without being distracted by mismatched audio. Many users have reported that their clients couldn’t distinguish HeyGen’s AI avatars from real presenters – a testament to its ability to replicate natural expressions and emotional nuances. The platform also lets creators fine-tune movements and expressions, ensuring the final output aligns perfectly with the intended tone of the original content.

Next, we’ll explore the final tool that continues to push the boundaries of AI-driven lip-sync technology.

5. Hyra

Hyra’s AI engine is designed to deliver fast, synchronized outputs, cutting down on delays in video production workflows. By leveraging its strength in quick AI responses, Hyra brings these advantages to video lip sync tasks, ensuring smoother synchronization with minimal lag.

Real-Time Processing and Latency

With real-time processing, Hyra elevates video lip sync by significantly reducing delays during dubbing. Its streamlined system ensures instant, precise synchronization, improving accuracy and making it a reliable solution for seamless lip sync across various video production projects.

Tool Comparison Chart

Here’s a quick overview of the key features that set these AI lip sync tools apart. Use this chart to find the one that fits your needs for multilingual, real-time, and multi-speaker projects.

| Feature | VisualDub by NeuralGarage | Syncmonster AI | Sync.so | HeyGen | Hyra |
|---|---|---|---|---|---|
| Language Support | 35+ languages with AI-driven visual localization | Advanced multilingual dubbing, preserves context | Covers major languages | Multilingual dubbing with limited translation support | – |
| Real-Time Processing | Seamless integration with existing workflows | Blitz Mode and Pro Mode | – | – | – |
| Multi-Speaker Support | Advanced dialogue replacement without reshoots | Frame-by-frame control | – | Customizable voices, tones, and languages | – |
| Emotion Preservation | Studio-quality authenticity with visual localization | – | – | – | – |
| Best For | Film studios, OTT platforms, ad agencies | Content creators and digital marketing agencies needing flexible processing modes | API-based workflows | Multilingual video creation with speaker variety | – |

Key Takeaways from the Chart

Language support is one of the most important features for these tools. VisualDub leads the pack with support for over 35 languages and AI-powered visual localization. Syncmonster AI also excels here, offering advanced multilingual dubbing that keeps the original context intact. HeyGen, while multilingual, has more limited translation capabilities.

For real-time processing, Syncmonster AI stands out with its dual modes: a fast mode for quick results and a precision mode for detailed work. This flexibility can be a game-changer for creators working on tight deadlines.

When it comes to multi-speaker support, VisualDub, Syncmonster AI, and HeyGen each offer distinct advantages. Syncmonster AI allows manual voice assignments, simplifying work on multi-character projects. Meanwhile, HeyGen provides options to customize voices, tones, and languages for different speakers in the same video [21].

Emotion preservation is where only VisualDub shines. It delivers studio-quality results by maintaining the authenticity of the original speaker’s delivery. This is especially important for branded content or projects that rely heavily on emotional impact.

Lastly, seamless integration with existing workflows can save time and resources. Tools like VisualDub and Syncmonster AI offer features that align well with professional video production pipelines, reducing the need for extensive training or setup.

Each tool has its strengths, so choosing the right one depends on your specific production needs, whether it’s language breadth, real-time capabilities, or emotional depth.

Conclusion

To wrap it all up, selecting the right AI lip sync tool boils down to your production needs and budget.

VisualDub by NeuralGarage stands out for delivering studio-quality results with emotional depth in over 35 languages. It’s a top pick for advertising agencies, professional film studios, and OTT platforms where preserving the original speaker’s delivery is a priority.

For projects involving multiple characters, both VisualDub and Syncmonster AI shine with their ability to handle multiple faces at once. On the other hand, Sync.so is perfect for developers, thanks to its robust API integration and scalability.

If multilingual accuracy and seamless lip sync are your goals, HeyGen is a strong contender. Meanwhile, Hyra is tailored for more specialized production requirements.

Traditional video production costs can range anywhere from $800–$1,000 per minute for simpler videos to over $10,000 per minute for more complex ones. By cutting production costs by up to 40% and saving up to 50% in time, these AI tools are reshaping the economics of video creation.
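As a worked example of these figures, the short Python sketch below applies the article's "up to 40%" saving to its lower per-minute price range. The three-minute video length and the function name are assumptions for illustration, and because 40% is an upper bound, the results represent a best case rather than a typical outcome.

```python
def cost_after_savings(cost_per_minute, minutes, savings_pct):
    """Total cost after applying a percentage saving to the baseline cost."""
    baseline = cost_per_minute * minutes
    return baseline * (100 - savings_pct) / 100


if __name__ == "__main__":
    minutes = 3        # hypothetical video length (assumption)
    max_savings = 40   # "up to 40%" from the article, so a best case

    for rate in (800, 1000):  # simpler-video range quoted in the article
        baseline = rate * minutes
        reduced = cost_after_savings(rate, minutes, max_savings)
        print(f"${rate}/min: baseline ${baseline}, best case ${reduced:.0f}")
```

Under these assumptions, a three-minute video that would traditionally cost $2,400 to $3,000 comes to $1,440 to $1,800 at the maximum claimed saving.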

For film dubbing, prioritize tools with advanced voice cloning and precise lip sync to maintain authenticity. Live streaming creatives will benefit from real-time translation and API solutions, while multilingual content creators should look for tools offering broad language support with emotional accuracy.

Consider your usage patterns – whether a subscription plan is better for regular projects or a pay-per-use model suits occasional needs. Testing with short clips before committing to larger projects can help ensure the tool aligns with your expectations. Combining AI tools with human oversight often leads to the best results.
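The subscription versus pay-per-use question can be framed as a simple break-even calculation. The Python sketch below is illustrative only: the prices are made-up placeholders, not any vendor's actual pricing, and it assumes a flat monthly subscription with no usage cap.

```python
import math


def break_even_minutes(monthly_fee, price_per_minute):
    """Smallest whole number of minutes per month at which pay-per-use
    costs at least as much as a flat subscription."""
    return math.ceil(monthly_fee / price_per_minute)


def cheaper_plan(minutes_per_month, monthly_fee, price_per_minute):
    """Return which pricing model costs less for the given monthly usage."""
    pay_per_use_cost = minutes_per_month * price_per_minute
    if pay_per_use_cost < monthly_fee:
        return "pay-per-use"
    if pay_per_use_cost > monthly_fee:
        return "subscription"
    return "either"


if __name__ == "__main__":
    # Placeholder prices (assumptions): $50/month flat vs $2 per minute.
    print(break_even_minutes(50, 2))
    print(cheaper_plan(10, 50, 2))
    print(cheaper_plan(40, 50, 2))
```

With these placeholder prices, the two models cost the same at 25 minutes per month; below that, pay-per-use is cheaper, and above it the subscription wins.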

As AI lip sync technology continues to advance, each tool brings unique strengths to the table. The key is to match the tool to your production scale, quality requirements, and integration preferences.

FAQs

How does VisualDub use AI to enhance video production quality and save time?

VisualDub uses AI-driven lip-sync technology to revolutionize video production by automating the alignment of audio with on-screen lip movements. Its advanced algorithms ensure that voiceovers match the speaker’s lips perfectly, delivering a smooth and natural viewing experience. This eliminates the clunky, mismatched look that often comes with traditional dubbing, making content more engaging and easier to follow.

By streamlining this process, VisualDub not only improves the quality of multilingual videos but also cuts down on production time and expenses. This allows filmmakers, content creators, and marketers to dedicate more energy to creativity while producing polished, professional videos faster.

What should I look for in an AI tool for multilingual video projects?

When choosing an AI tool for multilingual video projects, there are a few important factors to keep in mind. First and foremost, accuracy is essential. You want translations that are not only correct but also preserve the original meaning, including subtle cultural details.

Make sure the tool supports all the languages required for your project. It’s also worth paying attention to the quality of the voiceovers – they should sound natural and align smoothly with the visuals for a professional finish.

Lastly, think about the tool’s efficiency. It should integrate easily with your workflow, save you time, and cater to regional preferences – all while staying within your budget.

How do AI tools maintain natural facial expressions and emotions during lip-syncing?

AI tools leverage sophisticated machine learning algorithms to study the original video, capturing natural facial expressions and emotions to perfect the lip-sync process. By analyzing the speaker’s distinct facial movements, subtle micro-expressions, and emotional nuances, these tools ensure that the synchronized lip movements align with the performance’s tone and context.

Many systems also incorporate input from animators or undergo fine-tuning to refine the expressions further. This approach helps produce a final output that not only appears accurate but also feels natural, staying true to the original intent of the video.

