Global audiences expect content in their native language – but traditional localization methods can’t keep up. Manual video dubbing takes weeks, costs thousands per video, and often produces awkward mismatches between audio and visuals. Subtitles are faster and cheaper, but viewers barely engage with them.

AI lip-sync technology is changing the game. By automatically syncing translated dialogue with on-screen lip movements, it delivers the engagement of professional dubbing at a fraction of the cost and time.

In Short (TL;DR):

- AI lip-sync produces more natural, engaging multilingual video than traditional video localization methods.

- It speeds up localization significantly, with industry reports noting up to 80% faster production.

- AI lip-sync cuts costs by eliminating reshoots and repetitive editing while scaling efficiently across many languages to support global video expansion.

- Platforms like VisualDub offer studio-quality lip-sync, 32-bit EXR support, 50+ languages, and smooth workflow integration, enabling cinematic multilingual content without reshoots.

Here’s how AI lip-sync stacks up against traditional methods:

Quick Comparison of Localization Methods

| Method | Cost | Speed | Engagement | Accuracy |
| --- | --- | --- | --- | --- |
| Subtitles | Low | Very High | Low | High |
| Manual Dubbing | High | Slow | Average | Low |
| AI Lip-Sync | Low | High | Ultra High | High |

The comparison makes it clear: AI lip-sync technology delivers the best balance of speed, cost-efficiency, audience engagement, and accuracy for video localization.

Before exploring how AI lip-sync solves these issues, it’s worth understanding why traditional video localization methods fall short.

Problems with Standard Video Localization Methods

Traditional video localization methods come with hefty price tags and sluggish timelines. These challenges become even more pronounced when businesses aim to expand their content into multiple languages and regions.

The main challenges lie in production, synchronization, and scaling:

1. Expensive and Slow Production

Manual dubbing is a labor-intensive process, requiring coordination between translators, voice actors, sound engineers, and quality assurance teams, each adding to the overall complexity and cost. For example, dubbing a 15-minute English video into Spanish can cost around $2,000, while subtitling the same video costs about $200.

Beyond cost, dubbing is notoriously slow, often taking weeks or months. Every step, from translation and casting to recording and editing, extends the schedule. Juggling multiple languages, studios, and quality standards slows the process further and makes mismatched audio and visuals more likely.

2. Poor Audio-Visual Matching

One of the most glaring issues with traditional dubbing is the lack of synchronization between the dubbed audio and the on-screen lip movements. Achieving accurate lip-sync is difficult because languages differ in rhythm, syllable counts, and sentence structures, which directly affect timing.

Additionally, literal translations often fail to capture emotional nuance or regional subtleties. This forces a trade-off between accuracy and natural-sounding dialogue, leaving content that can feel disconnected from the visuals. Synchronization challenges not only hurt viewer engagement but also make it harder to scale content for global audiences.
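To make the timing problem concrete, here is a minimal Python sketch that flags dubbed lines whose duration drifts too far from the original. Everything in it is an illustrative assumption rather than how any particular studio or platform works: the `Segment` structure, the `flag_mismatches` helper, and the 15% tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    line_id: str
    source_seconds: float      # duration of the original spoken line
    translated_seconds: float  # duration of the dubbed line at a natural pace


def stretch_ratio(segment: Segment) -> float:
    """How much longer (>1) or shorter (<1) the dubbed line is than the original."""
    if segment.source_seconds <= 0:
        raise ValueError("source_seconds must be positive")
    return segment.translated_seconds / segment.source_seconds


def flag_mismatches(segments: list[Segment], tolerance: float = 0.15) -> list[str]:
    """Return the ids of lines whose duration differs by more than `tolerance`.

    The 15% default is an arbitrary illustrative threshold, not an industry standard.
    """
    return [s.line_id for s in segments if abs(stretch_ratio(s) - 1.0) > tolerance]


# Hypothetical durations for three lines of dialogue
segments = [
    Segment("line-1", source_seconds=2.0, translated_seconds=2.1),
    Segment("line-2", source_seconds=2.0, translated_seconds=2.8),
    Segment("line-3", source_seconds=3.0, translated_seconds=2.4),
]
flagged = flag_mismatches(segments)
print(flagged)
```

Lines that get flagged are the ones a dubbing team would normally have to rewrite, re-record, or time-stretch by hand, which is where much of the manual effort goes.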

3. Hard to Scale Across Languages

Scaling video localization across multiple languages is another uphill battle. The costs, time demands, and difficulty of maintaining consistent quality all increase as more languages are added.

Each language requires its own team of translators, voice actors, and quality assurance experts. For less common languages, finding qualified professionals can be even harder, leading to either quality compromises or extended timelines. On top of everything, ensuring content resonates with local audiences and respects regional sensitivities becomes more complex as the number of target languages grows.

How AI Lip-Sync Technology Fixes These Problems

Let’s look at how AI-powered lip-sync directly solves the localization challenges outlined above:

1. Accurate Lip Movement Matching

One of the standout features of AI lip-sync technology is its ability to precisely replicate mouth movements using advanced machine learning algorithms. Traditional dubbing struggles with mismatched audio and visuals. AI fixes this by syncing mouth movements to translated dialogue, keeping emotions consistent.

Studies indicate that audiences are far more likely to watch a video to completion when audio and visuals are closely synchronized. Modern AI tools are reported to reach accuracy rates as high as 95%, which makes this seamless viewing experience possible at scale.

2. Faster Production Times

AI dramatically reduces the time required to sync translated audio with lip movements, processing one minute of content in just 10 seconds. By automating tasks like analyzing raw footage, generating cuts, suggesting transitions, and identifying the best shots, AI significantly speeds up production timelines, with industry reports citing gains of up to 80%.
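As a rough illustration of what that rate means in practice, the short Python sketch below converts the quoted figure of 10 seconds per minute of content into estimated processing times. It assumes the rate scales linearly with video length, which real workloads may not do; the function names are hypothetical.

```python
def processing_seconds(content_minutes: float,
                       seconds_per_content_minute: float = 10.0) -> float:
    """Estimated processing time, assuming the quoted rate scales linearly."""
    if content_minutes < 0:
        raise ValueError("content_minutes must be non-negative")
    return content_minutes * seconds_per_content_minute


def realtime_factor(seconds_per_content_minute: float = 10.0) -> float:
    """How many times faster than real-time playback the processing runs."""
    return 60.0 / seconds_per_content_minute


if __name__ == "__main__":
    for minutes in (1, 15, 90):
        estimate = processing_seconds(minutes) / 60
        print(f"{minutes:>3} min of video: about {estimate:.1f} min of processing")
```

Under that assumption, a 15-minute video would take about 2.5 minutes to process and a 90-minute feature about 15 minutes, or roughly six times faster than real time.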

3. Lower Costs and Easier Scaling

Shorter production times naturally lead to cost savings, especially when scaling content for multilingual audiences. Traditional dubbing often involves expensive reshoots and complex editing processes, but AI simplifies these steps, making video localization more cost-effective.

The scalability of AI lip-sync technology is reflected in industry trends, where organizations adopting AI localization often report outcomes such as:

- 20 to 40% increases in website visits and viewer return rates after adding new language versions

- Up to 30× improvements in short-form video performance across multilingual channels

- 10 to 20% increases in regional engagement after introducing localized content

- Up to 10× reductions in localization costs over multi-year periods

- Automation of 10 to 20% of recurring translation workflows within the first few months

“Organizations that embrace these advancements will not only meet the needs of today’s multilingual world but also lead the charge in shaping a more interconnected future.” – KUDO

Main Features of AI Lip-Sync Platforms

If you’re evaluating AI lip-sync tools for your production workflow, feature lists can blur together quickly. Here’s what actually separates platforms that deliver broadcast-ready results from those that don’t.

1. Professional-Grade Video Quality

Modern AI lip-sync platforms keep the quality of the original video intact while delivering precise synchronization. Well-lit, high-definition source footage helps these tools detect faces and track lip movements more accurately.

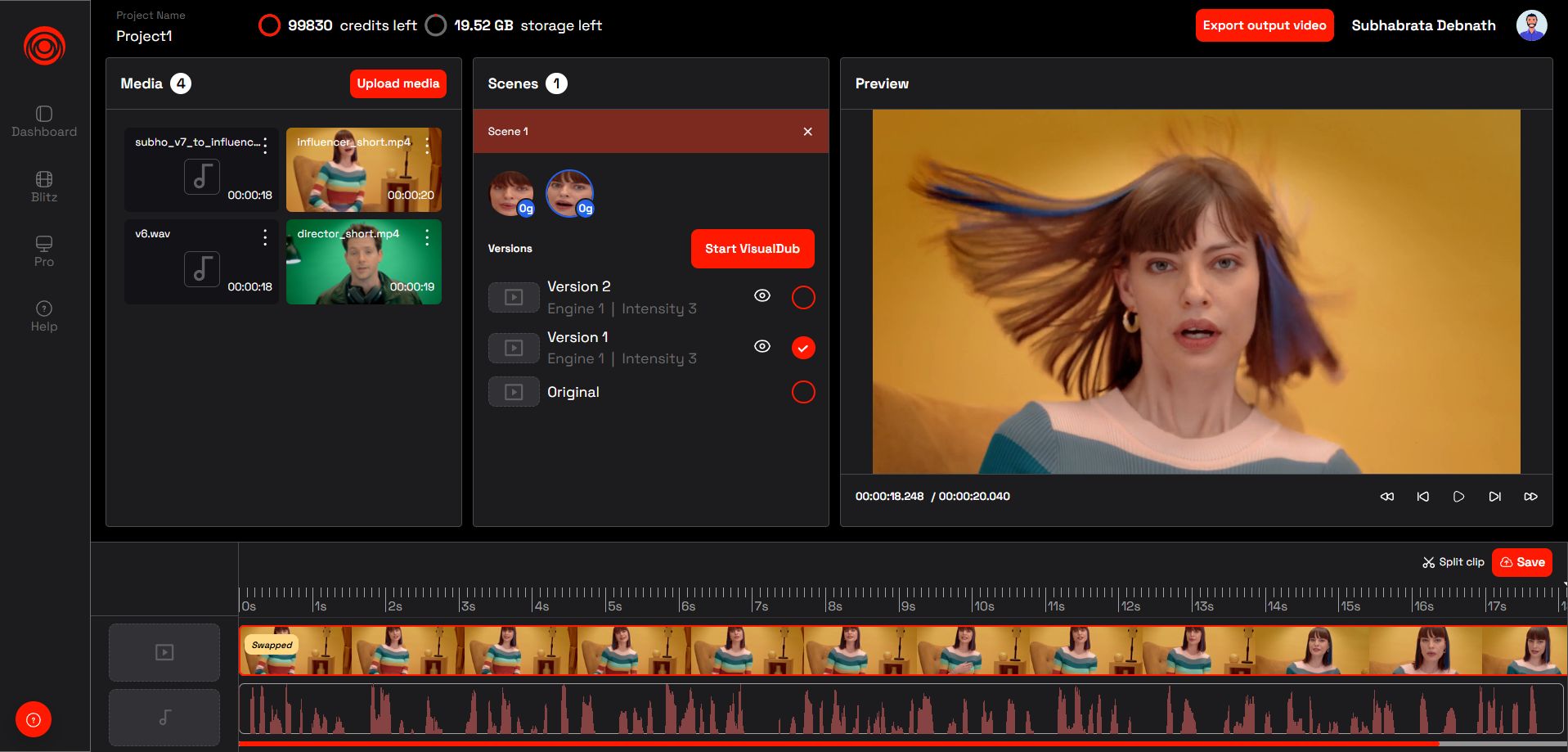

For instance, VisualDub by NeuralGarage supports 32-bit EXR files, enabling cinema-quality visuals suitable for theatrical productions shot with high-end cameras. Advanced machine learning models analyze mouth movement patterns for various sounds, preserving the authenticity of the original performance while reducing costly rework.

2. Multilingual Capabilities and Customization

One of the standout features of AI lip-sync platforms is their ability to support multiple languages. Leading platforms like VisualDub offer compatibility with over 50 languages, making it easier for businesses to connect with global audiences while bypassing the traditional hurdles of cost and complexity.

These platforms go beyond simple translation, including tools like voice cloning, custom pronunciation adjustments, and voice modulation. Hyper-personalization features enable businesses to craft targeted messages that resonate with diverse cultural audiences, while dialogue replacement functionality eliminates the need for expensive reshoots.

3. Seamless Integration with Existing Workflows

AI lip-sync platforms are designed to integrate effortlessly into current production systems. Platforms like VisualDub offer API integration, allowing ad agencies, film studios, and OTT platforms to incorporate advanced lip-sync technology without disrupting their established workflows.

This seamless integration can reduce overall production time significantly, with AI processing one minute of content in just 10 seconds. Cost savings are equally impressive: AI video dubbing services start at just $1.50 per minute, a fraction of the cost of traditional methods.
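To put those prices side by side, here is a back-of-the-envelope Python sketch using only figures quoted in this article: about $2,000 to dub and $200 to subtitle a 15-minute video, and AI dubbing from $1.50 per minute. It assumes costs scale linearly with length and number of languages, and it treats the AI figure as a starting price that may not include lip-sync or human review. The `estimate_costs` helper is hypothetical.

```python
# Figures quoted in this article; treat them as rough reference points only.
MANUAL_DUB_COST_PER_15_MIN = 2000.0
SUBTITLE_COST_PER_15_MIN = 200.0
AI_DUB_COST_PER_MIN = 1.50  # quoted starting price


def estimate_costs(minutes: float, languages: int = 1) -> dict[str, float]:
    """Linear cost estimate per method for a video of `minutes` in `languages`."""
    if minutes < 0 or languages < 1:
        raise ValueError("minutes must be >= 0 and languages >= 1")
    scale = minutes / 15.0 * languages
    return {
        "manual_dubbing": round(MANUAL_DUB_COST_PER_15_MIN * scale, 2),
        "subtitles": round(SUBTITLE_COST_PER_15_MIN * scale, 2),
        "ai_dubbing": round(AI_DUB_COST_PER_MIN * minutes * languages, 2),
    }


if __name__ == "__main__":
    for method, cost in estimate_costs(minutes=15, languages=5).items():
        print(f"{method:<15} ${cost:,.2f}")
```

On these assumptions, a 15-minute video in five languages comes to about $10,000 for manual dubbing, $1,000 for subtitles, and $112.50 for AI dubbing at the starting price.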

Business Benefits of AI Video Localization

Switching to AI-powered video localization isn’t just a tech upgrade – it’s a game-changer for businesses. Companies embracing this technology are seeing real results: stronger viewer connections, quicker global growth, and smarter resource use.

1. Better Viewer Engagement

When content speaks your audience’s language – literally – it resonates more. Native-language videos with accurate lip-syncing build trust and keep viewers engaged. AI lip-sync technology ensures the audio and visuals align perfectly, creating a smooth, natural viewing experience that avoids the awkwardness of traditional dubbing.

Educators and online creators often see major gains when they localize content with AI, from faster workflows to significantly reduced costs. This allows them to offer multilingual learning experiences without adding operational overhead.

When viewers feel connected, businesses are primed for growth – and that growth can happen fast.

2. Faster International Growth

AI lip-sync technology eliminates the usual delays of traditional localization methods. This speed gives companies the agility to act on market opportunities as they emerge.

Consider this: the global video translation service market is expected to hit $6.5 billion by 2030, growing at an annual rate of 14.3%. Companies using AI localization are better positioned to grab a larger slice of this booming market, thanks to their ability to move faster than competitors sticking to older methods.

For instance, one online educator used AI localization to streamline course creation and reduce expenses. By converting lessons into multiple languages quickly, they expanded their global reach without sacrificing quality.

Industry reports and case studies frequently cite time savings of up to 80% when teams move from manual workflows to AI-assisted production. This quick turnaround lets them respond to trends and seasonal demand effectively. For instance, a retail startup using AI-created short videos for TikTok saw a 25% jump in conversion rates. Similarly, fashion brands using AI video content saw 3.2× higher engagement rates and a 27% boost in average order values compared to static images.

Faster localization doesn’t just drive growth – it saves money, too.

3. Cost Reduction and Better Resource Use

AI localization isn’t just about speed and engagement; it’s also a budget-friendly solution. Some vendors report reductions of more than 50% in localization resource needs. For example, one provider claims to have reduced human translator headcount by 76% using its AI-driven M3 Framework, a shift that significantly lowers long-term localization costs.

The efficiency gains are striking. In Q1 2025, Ollang’s M3 Framework helped a media company reduce its translator team from 886 to 213 while producing the same volume of content in four languages. Another media partner used AI dubbing to localize over 600 hours of documentaries – a task that would have taken months and significant expense with traditional methods.
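The 76% headcount figure follows directly from the team sizes quoted above, as this quick Python check shows. The `percent_reduction` helper is just an illustrative name.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Translator team sizes quoted in this article
reduction = percent_reduction(886, 213)
print(f"{reduction:.1f}% reduction")
```

Going from 886 to 213 translators is a drop of 673 people, which rounds to the 76% reduction cited.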

Advanced tools like VisualDub bring even more precision, offering cinema-grade results that meet the high standards of major film studios and streaming platforms. For instance, a Rome-based film company combined AI subtitling with professional oversight to achieve high-quality localization at a fraction of the cost.

“Balance opportunities against risks. Use AI strategically to reach new markets faster, but prioritize quality and accessibility.” – Vanessa Lecomte, Localization Operations Manager, BBC Studios

And the consumer demand is clear: 76% of buyers prefer products with instructions in their native language, and 84% say videos have influenced their purchasing decisions. AI localization allows businesses to meet these preferences without ballooning their budgets.

Conclusion: What’s Next for Multilingual Video Localization

AI-powered lip-sync technology is transforming global video content. Companies are cutting localization costs, achieving 95% accuracy levels, and boosting engagement. With the AI dubbing market projected to hit $2.9 billion by 2033, this shift is here to stay.

What sets this technology apart is its ability to scale across languages while preserving emotional and cultural nuances. When visual and auditory elements sync perfectly, viewers watch longer and convert better – crucial when 55% of global consumers prefer product information in their native language.

The future is expanding rapidly. Real-time multilingual video generation is enabling instant translation for live streams, while tools are becoming increasingly context-aware, interpreting tone, intent, and cultural cues with greater precision.

Platforms like VisualDub are leading the charge, delivering studio-quality lip-sync in over 50 languages while maintaining cinematic standards. With support for up to 32-bit EXRs, the technology is versatile enough for everything from social media clips to blockbuster films.

If you’re considering AI localization, start small: a pilot project lets you test the technology against your specific requirements, and a demo is the best way to understand what’s possible.

Book a call with us to see how VisualDub delivers visually authentic lip-sync for your localization needs.

FAQs

Q 1. How does AI-powered lip-sync enhance viewer engagement compared to traditional dubbing or subtitles?

AI lip-sync enhances engagement by making translated videos look more natural and visually aligned with the audio. Instead of mismatched dubbing or distracting subtitles, the lip movements match the spoken language closely, creating a smoother and more immersive experience.

Because the process is automated, AI lip-sync also speeds up video localization while maintaining high visual consistency. This helps brands deliver multilingual content faster, improving viewer retention and connection across global audiences.

Q 2. What are the cost and time-saving advantages of using AI lip-sync technology for multilingual video production?

AI lip-sync reduces both cost and production time by automating traditionally manual localization tasks. Brands no longer need multiple voice actors or expensive reshoots, making the process far more budget-friendly.

By automating transcription, syncing, and language adaptation, AI shortens the entire workflow. This allows teams to produce multilingual videos faster, scale into new markets quickly, and maintain consistent quality across all languages.

Q 3. How does AI lip-sync technology ensure accurate synchronization of lip movements with translated audio in different languages?

AI lip-sync tools use machine learning to analyze speech, facial movements, and articulation, generating lip movements that match the translated audio realistically. This eliminates manual adjustments or reshoots.

By preserving emotional nuance and timing, the technology produces smooth, believable multilingual video content that feels natural to viewers, improving accessibility, engagement, and localization accuracy.